Abstract

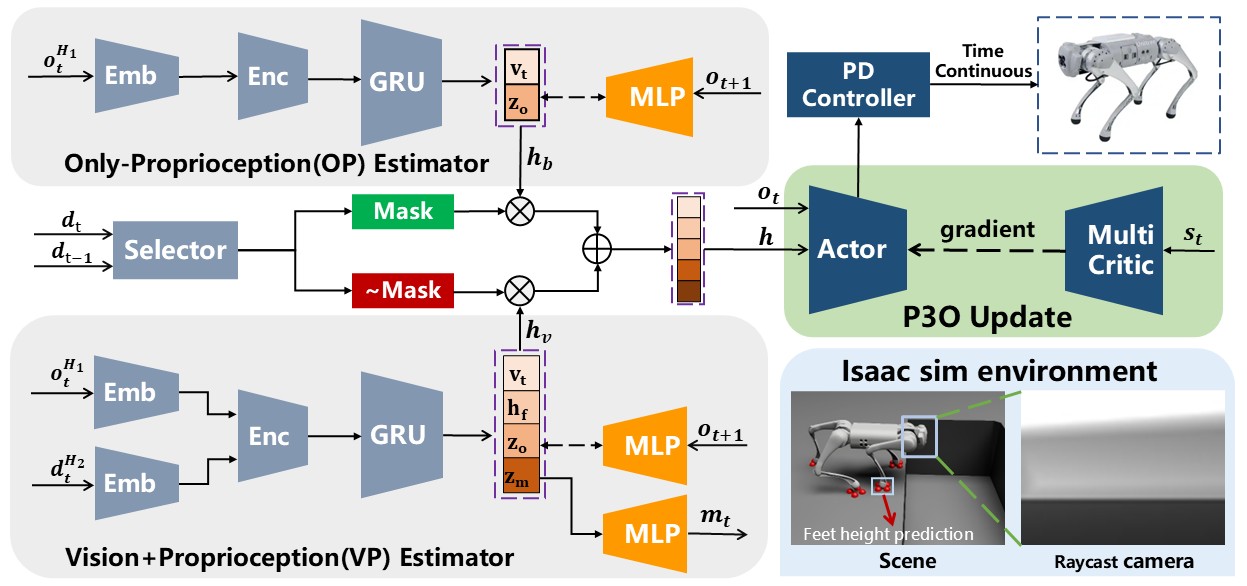

Vision-based locomotion in outdoor environments presents significant challenges for quadruped robots. Accurate environmental prediction and effective handling of depth sensor noise during real-world deployment remain difficult, which severely restricts the outdoor applications of such algorithms. To address these deployment challenges in vision-based motion control, this letter proposes the Redundant Estimator Network (RENet) framework. The framework employs a dual-estimator architecture that ensures robust motion performance and keeps deployment stable when onboard vision fails. Through online estimator adaptation, our method enables seamless transitions between estimation modules when visual perception is uncertain. Experimental validation on a real-world robot demonstrates the framework's effectiveness in complex outdoor environments, with particular advantages in scenarios with degraded visual perception. These results indicate the framework's potential as a practical solution for reliable robotic deployment in challenging field conditions.
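To make the dual-estimator idea concrete, the following is a minimal sketch of one way two redundant estimators could be combined. It is not the RENet implementation: the validity check (`valid_ratio`), the class `RedundantEstimator`, and every threshold and rate are hypothetical, and this page does not describe how the paper's online adaptation actually works. The sketch assumes a vision-based estimator, a proprioception-only fallback, and a trust weight that drops when the depth frame looks unusable and recovers gradually afterwards.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence


def valid_ratio(depth: Sequence[float], near: float, far: float) -> float:
    """Fraction of depth pixels that are finite and inside [near, far]."""
    if not depth:
        return 0.0
    ok = sum(1 for d in depth if math.isfinite(d) and near <= d <= far)
    return ok / len(depth)


@dataclass
class RedundantEstimator:
    """Hypothetical blend of a vision estimator and a proprioceptive fallback."""
    vision_estimator: Callable[[Sequence[float], Sequence[float]], List[float]]
    proprio_estimator: Callable[[Sequence[float]], List[float]]
    near: float = 0.1             # assumed sensor range in metres
    far: float = 3.0
    min_valid_ratio: float = 0.6  # assumed threshold for a usable frame
    blend_rate: float = 0.25      # assumed per-step change of the trust weight
    vision_weight: float = 1.0    # current trust in the vision estimate

    def step(self, depth: Sequence[float], proprio: Sequence[float]) -> List[float]:
        frame_ok = valid_ratio(depth, self.near, self.far) >= self.min_valid_ratio
        target = 1.0 if frame_ok else 0.0
        # Move the trust weight a fraction of the way toward its target.
        self.vision_weight += self.blend_rate * (target - self.vision_weight)

        fallback = self.proprio_estimator(proprio)
        if not frame_ok:
            # Unusable frame: skip the vision estimator and use the fallback only.
            return fallback
        vision = self.vision_estimator(depth, proprio)
        w = self.vision_weight
        return [w * v + (1.0 - w) * f for v, f in zip(vision, fallback)]
```

With this design the output falls back to the proprioceptive estimate as soon as a frame is judged unusable, and vision is blended back in over several steps once frames are valid again, which avoids an abrupt jump in the estimate on recovery.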

Training in Isaac Sim

Locomotion Ability

Stable Control under Visual Collapse

Robust Locomotion under Visual Deception

Failure Cases

Without image clipping and raycaster randomization, the robot cannot predict the environment correctly.
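As an illustration of what depth-image clipping and sensor randomization can look like during training, here is a minimal NumPy sketch. The function names, the depth range, the noise level, and the dropout probability are all assumptions made for this example; the page does not state the values or the exact randomization applied to the raycaster, which may also include perturbing the camera pose.

```python
import numpy as np


def randomize_depth(depth, rng, noise_std=0.02, dropout_prob=0.05, far=3.0):
    """Add Gaussian noise and random 'no return' pixels (assumed noise model)."""
    depth = np.asarray(depth, dtype=np.float64)
    noisy = depth + rng.normal(0.0, noise_std, size=depth.shape)
    dropped = rng.random(depth.shape) < dropout_prob
    noisy[dropped] = far  # treat dropped pixels as maximum range
    return noisy


def clip_depth(depth, near=0.1, far=3.0):
    """Replace invalid readings, clip to [near, far], and scale to [0, 1]."""
    depth = np.nan_to_num(np.asarray(depth, dtype=np.float64),
                          nan=far, posinf=far, neginf=near)
    clipped = np.clip(depth, near, far)
    return (clipped - near) / (far - near)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    raw = np.array([[0.05, 1.0], [np.inf, np.nan]])
    print(clip_depth(randomize_depth(raw, rng)))
```

Clipping bounds the range of values the network sees, so out-of-range or missing readings on the real sensor map to the same values that appeared in simulation.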

Without zero-command training, the velocity is hard to control and the robot lacks robustness.
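One common way to include zero commands in training is to replace a fraction of the sampled velocity commands with an all-zero command, so the policy also learns to stand still. The sketch below assumes this approach; `sample_command`, the zero-command probability, and the velocity limits are hypothetical and are not taken from the paper.

```python
import random


def sample_command(rng, zero_prob=0.1, max_lin=1.0, max_yaw=1.0):
    """Return (vx, vy, yaw_rate); with probability zero_prob, return all zeros."""
    if rng.random() < zero_prob:
        return (0.0, 0.0, 0.0)
    return (
        rng.uniform(-max_lin, max_lin),  # forward velocity, m/s
        rng.uniform(-max_lin, max_lin),  # lateral velocity, m/s
        rng.uniform(-max_yaw, max_yaw),  # yaw rate, rad/s
    )


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        print(sample_command(rng))
```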

With only the vision-based estimator, the robot cannot walk normally under heavy visual interference.

BibTeX

@ARTICLE{11155164,
  author={Zhang, Yueqi and Qian, Quancheng and Hou, Taixian and Zhai, Peng and Wei, Xiaoyi and Hu, Kangmai and Yi, Jiafu and Zhang, Lihua},
  journal={IEEE Robotics and Automation Letters},
  title={RENet: Fault-Tolerant Motion Control for Quadruped Robots via Redundant Estimator Networks under Visual Collapse},
  year={2025},
  volume={},
  number={},
  pages={1-8},
  keywords={Legged Robots;Reinforcement Learning},
  doi={10.1109/LRA.2025.3608633}
}